One of the best things about email marketing is how easily you can experiment with different parts of your campaigns to measure how they’re working and to find ways to improve them for better results.

This process, called testing (or “experiments” within Iterable), is a best practice for email marketers because it helps you make better decisions about your campaigns. You can test everything and anything:

- Which subject line will prompt more readers to open and act on the email?

- Do my subscribers react better to emotional appeals or cold, hard facts?

- Does a call to action placed higher up in the email draw more clicks than one farther down?

- Does an email sent in the morning generate more opens, clicks or conversions than one sent in the afternoon or late at night?

Iterable’s A/B Experiments feature makes it easy to set up and run a test by following a set of step-by-step guidelines. This allows you to update and optimize your campaigns faster and more efficiently, producing better results. You can also test both broadcast and automated or triggered campaigns.

However, you need a good strategy to guide your experiment design and operation. Whether you’re new to testing or looking to level up your game, this post will help you understand what’s at stake and how to create email experiments that will give you the greatest insight into the challenges you’re facing.

Why Experiment?

Testing with A/B Experiments will help you learn what prompts more of your customers to do the things you want them to do in your emails: to open them, visit your landing pages, buy a new product, sign up for an event, download information, create an account or any other goal you have for that campaign.

Testing usually shows marketers which variation of an email is more likely to drive higher revenue, but there’s much more you can do with a well-designed experiment.

When done properly, it will give you the kind of data you need to make better decisions about your email program, whether it’s to drive higher revenue immediately or to gain long-term insight about your customers to guide strategic planning as well as campaign design.

With a strong testing program, you don’t need to rely on instinct or guesswork, both of which can lead you far down the wrong path and cost you time, money, sales and engagement.

Instead, you can call up your data and tell your team, “Let’s use emotional language for our Mother’s Day promotion because our testing shows it’s more effective than humor.”

Think Before You Test

Reread the previous section, and you’ll see an important qualifier: “When done properly.”

Think back to your high school chemistry class for a minute. Setting up your experiments took more than a random assembly of beakers and heat sources. You needed to know why you were testing, what variables you were testing and what you hoped to learn from that test.

The same is true for testing in email marketing. Iterable can guide you through set-up and operation, but you must know what you want to learn from your test and what you need to test to get the most useful and accurate results.

Email Experiments: 4 Steps for Effective Testing

1. Identify your objective

Traditional A/B testing splits your email list and pits two variants against each other (Variant A and Variant B; hence, the name) to see which one drives an uplift in your results. However, experimenting can both give you an uplift on your campaign and provide long-term insights into your customer base.

When you set up your test, think about what you can learn about your customers as well as which testable elements in your email—the subject line, the preheader, copy design and content, time of day and day of week to send, call to action, etc.—got you the uplift.

See if you can learn what motivates your customers to act. Are they driven more by fear of losing or desire for a benefit? What will persuade them to buy more, or more often?

2. Aim to get information that will help you resolve a pain point

Identify a problem with your marketing program or business that you want to resolve with your email campaign. Maybe you need customers to make that crucial first purchase, to buy more often or to convert from free users to paid ones.

Resolving this pain point becomes your experiment’s objective. Once you nail that down, you can set up your testing plan.

3. Think through your testing process

Before you start setting up the experiment, you have to do some critical thinking. Don’t skimp on this step in the process because you need it to set up the kind of experiment that will give you the most useful results or accurate insights. A proper process includes the following:

- Create a hypothesis. A hypothesis is a statement that can be proved or disproved. It says, “I think that making this change will cause this effect BECAUSE…” The answer will then be “true” or “false.” But we also want to know why, so we can begin to know our customers better, which is why we add the all-important “because.” Here’s an example: “Loss-aversion copy is a stronger motivator towards conversions than benefit-led copy because people hate losing out more than they enjoy benefiting.”

- Select the factor (or factors) to test. Now is the time to select the factor (e.g., subject line, copy, offer or frequency) that will test your hypothesis, and to make the changes. One of the many benefits of testing based on a hypothesis is that, in some cases, you are free to test multiple factors. This is because a hypothesis gives you a broader field to investigate. Factor-led testing, although more common, can restrict the test’s learnings and usefulness.

- Ensure your results are valid. To ensure your results are statistically significant and not the result of errors or chance, you must select an appropriately sized sample from your database. An online calculator can help you with this.

- Measure the metric nearest to your test. There can be many unaccounted-for variables along the path to conversion, and outliers can skew your results. That’s why it’s critical to measure the metric that your test can actually influence. For example, in a subject line test, your success metric should be open rate, because average order size or another revenue-level metric won’t accurately reflect the effect of the subject line.
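If you're curious what a sample-size calculator does under the hood, here is a minimal Python sketch. It assumes a standard two-sided, two-proportion z-test, which is one common approach and not necessarily the one any particular calculator (or Iterable) uses. The function name, the 5% significance level and the 80% power are illustrative assumptions.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate recipients needed in EACH variant to detect the gap
    between two rates (e.g. open rates) with a two-sided z-test.

    All parameter names and defaults are illustrative assumptions.
    """
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be strictly between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to compute a sample size")

    # Critical values for the chosen significance level and power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    pooled = (baseline_rate + expected_rate) / 2
    spread_null = math.sqrt(2 * pooled * (1 - pooled))
    spread_alt = math.sqrt(
        baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    )

    numerator = (z_alpha * spread_null + z_beta * spread_alt) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


if __name__ == "__main__":
    # Hypothetical scenario: 20% baseline open rate, hoping to detect 22%.
    print(sample_size_per_variant(0.20, 0.22))
```

The takeaway for planning: the smaller the difference you want to detect, the more recipients each variant needs, so a list that is too small can only confirm large effects.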

At this point, you should have the guidance you need to set up your test. Iterable users can click “A/B Experiments” in the side menu of the dashboard and then proceed to the last step below.

4. Analyze the results

Go back to your experiment and view the results in your dashboard. Check that they are statistically valid by using another online calculator. (Iterable will do this for you automatically.)
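For readers who want to see what such a validity check involves, here is a minimal Python sketch of a two-proportion z-test on open counts. This is an assumption about one standard method, not a description of how Iterable or any specific calculator computes its figures. The function name and the example numbers are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(opens_a, sent_a, opens_b, sent_b):
    """Return (z, p_value) for the difference in open rate between
    variant A (control) and variant B, using a pooled two-sided z-test.

    Illustrative sketch only; names and inputs are assumptions.
    """
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b

    # Pooled rate under the assumption that both variants perform the same.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        raise ValueError("cannot test when the pooled rate is 0 or 1")

    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 1,000 of 5,000 opens vs. 1,100 of 5,000 opens.
    z, p = two_proportion_z_test(1000, 5000, 1100, 5000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below the threshold you chose in advance (0.05 is a common convention) suggests the difference is unlikely to be due to chance alone.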

Your test plan should allow you to record not only the results (yes or no to your hypothesis) but also your findings.

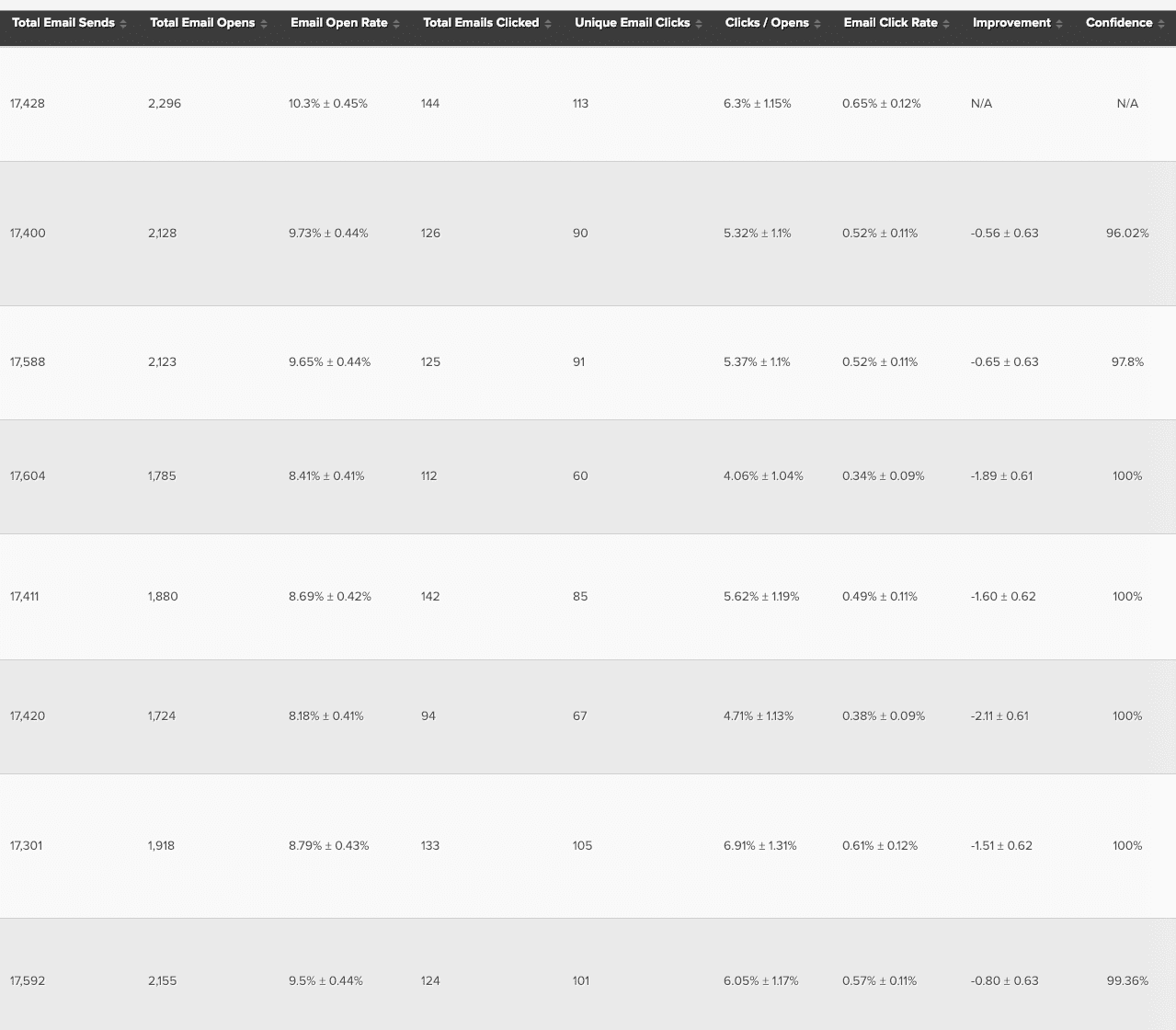

Here’s what the results page looks like for a subject-line test in Iterable (click the image to zoom in!):

This client tested eight different subject lines. The top entry is the control variant (which is why Improvement and Confidence are both N/A), and each of the other seven groups received a variation of the subject line. As you can see, the subject line sent to the control group (the No. 1 entry) won the test, with open rate as the success metric.

5 Best Practices for Email Experiments

Proper testing takes time and care to be sure your results reflect what’s really happening among your customers, not a fluke or errors in setting up your test. Keep these five practices in mind as you create your plan and set up your test:

- Always test the variants simultaneously to reduce the chance that time-based factors will skew your results.

- Test a sample large enough to produce statistically significant results.

- Review and gain insights from the empirical data collected, not your gut instinct.

- Test often, and build upon your learnings over time for the best results.

- Test only one hypothesis at a time so you know not only which campaign won but also why it won.
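The first practice on this list works best when subscribers are assigned to variants at random, so the groups differ only in the email they receive. As a rough illustration, here is a minimal Python sketch of a random, near-equal split. It is not how Iterable divides a list internally; the function name, the variant labels and the seed parameter are all hypothetical.

```python
import random


def assign_variants(subscribers, variants=("A", "B"), seed=None):
    """Randomly split subscribers into near-equal groups, one per variant.

    Illustrative sketch only; not a description of any platform's logic.
    """
    rng = random.Random(seed)  # a fixed seed makes the split reproducible
    shuffled = list(subscribers)
    rng.shuffle(shuffled)

    groups = {name: [] for name in variants}
    # Deal the shuffled list out like cards so group sizes differ by at most 1.
    for index, subscriber in enumerate(shuffled):
        groups[variants[index % len(variants)]].append(subscriber)
    return groups


if __name__ == "__main__":
    # Hypothetical list of ten subscriber addresses.
    emails = [f"user{i}@example.com" for i in range(10)]
    for name, members in assign_variants(emails, seed=42).items():
        print(name, len(members))
```

Once the groups are fixed, sending every variant in the same window keeps time-of-day and day-of-week effects from favoring one group.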

Another Testing Benefit: Applying Results Across Channels

Unless you operate only one marketing channel, you don’t communicate with your customers in a vacuum. Besides email, you have a website, likely a mobile app and SMS/push notifications, plus direct mail and other messaging. Often, what you learn from your email testing can inform your marketing efforts in those other channels.

Suppose your email tests show that your first-time buyers respond more enthusiastically to free shipping without a purchase limit, but your loyal customers—those who bought three or more times in 12 months or who belong to your loyalty club—prefer VIP access and exclusive offers.

Apply what you’ve learned to your web copy and page organization, to search keywords and their landing pages, to display ads and banners in remarketing campaigns, and see whether the findings relate to those customers as well.

Wrapping Up

Email experiments give you a solid basic testing structure that you can build on to sharpen your insights and improve your marketing efforts bit by bit across all channels. It’s another one of email’s superpowers that marketers so often overlook or ignore.

Ultimately, it’s another reason why investing time and money in email pays off across your entire marketing program.