Is your email testing falling short because of flaws in how you approach it?

Most advice about email testing focuses on specific tests rather than email test strategy.

Success means testing the right things the right way.

It’s not hard to learn: read on for four fundamental roadblocks that stand in the way of running email tests the right way.

1. Meek Tweaking

A meek tweak is a small change. As a rule, avoid meek tweaks, because small changes tend to create a small uplift in results.

Big changes are more likely to yield a bigger impact.

Here’s an example of a subject line meek tweak:

- We have got a little something for you, 3x points until 3rd

- We have got an extra something for you, 3x points until 3rd

The only difference is the word little vs. extra. While it’s not impossible that there would be a difference, it’s unlikely and at best it will be small. Not a fertile ground for testing.

It’s meek, not because it’s a one-word change but because it doesn’t change the power of the message. The two subject lines will be perceived as the same. The customer hears, “I’ll get 3x points” in both cases.

A meek tweak is one that doesn’t change the human perception.

However, it is possible that a one-word change can make a psychological difference.

ConversionXL found a 90% increase in form conversion rate on a webpage by changing the button call to action from “Start your free 30-day trial” to “Start my free 30-day trial.”

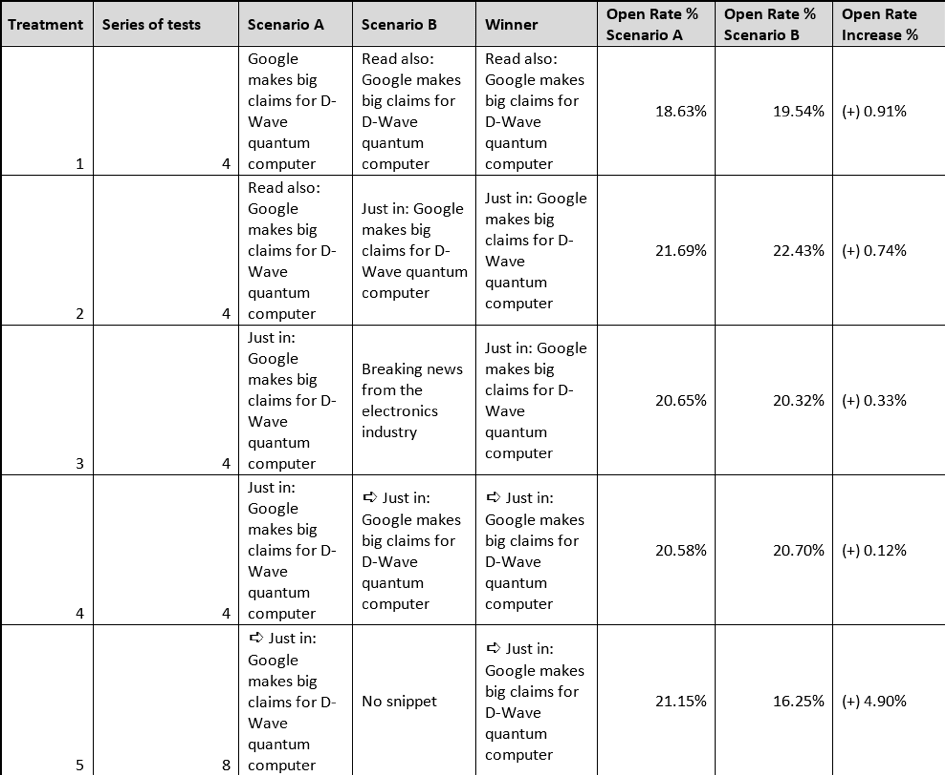

As seen below, this pre-header test from Marketing Experiments illustrates both meek and not meek tweaks.

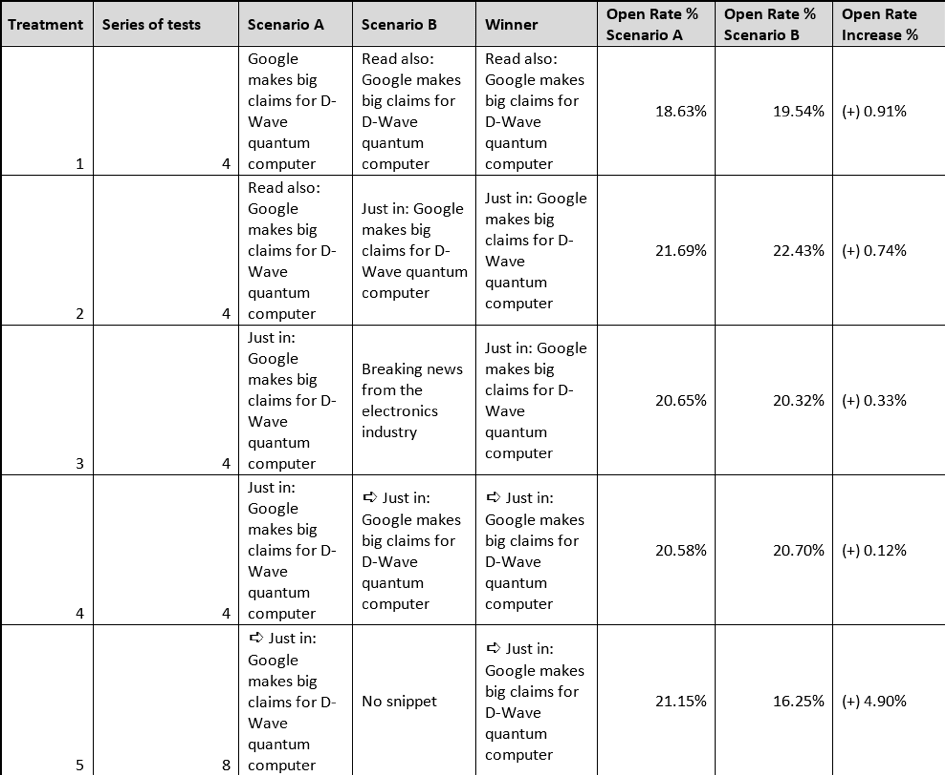

In this experiment, they ran five A/B split tests of the pre-header text.

Source: Marketing Experiments

Most tests were meek tweaks, such as adding an arrow symbol to the start of the text. The only test that wasn’t meek was the last one: treatment 5. This test compared using a pre-header against having no pre-header (no snippet).

While the first four treatments saw no statistically significant difference between A and B, the last test had a 30% open rate uplift (a 4.9 percentage point increase).
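The two figures in that result measure different things: the 30% is a relative uplift, while the 4.9 is an absolute change in percentage points. Here is a minimal sketch of the difference. The source does not give the underlying open rates, so the rates below are hypothetical values chosen only because they roughly reproduce the reported figures, and the function names are illustrative.

```python
def relative_uplift(control_rate: float, treatment_rate: float) -> float:
    """Relative uplift of treatment over control, as a fraction (0.30 = 30%)."""
    return (treatment_rate - control_rate) / control_rate


def point_difference(control_rate: float, treatment_rate: float) -> float:
    """Absolute difference between the two rates, in percentage points."""
    return (treatment_rate - control_rate) * 100


# Hypothetical open rates (assumption, not from the source).
control, treatment = 0.163, 0.212

print(f"Relative uplift: {relative_uplift(control, treatment):.0%}")
print(f"Absolute change: {point_difference(control, treatment):.1f} percentage points")
```

When reporting test results, it helps to state which of the two you mean, since the relative figure always looks bigger when the baseline rate is low.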

In another example, eBay sends frequent emails with dynamically generated relevant products at attractive prices. The number of different subject lines they have found to get attention is impressive.

Here’s a selection, with no obvious meek tweaks to be seen.

- Selections for you!

- Tim, we’ve gathered some of your favorites together.

- Your own private shopping store.

- All the deals you want Tim!

- Tim, fancy finding something new?

- Shhh, don’t tell: We’re completely serious, enjoy ALL these…

- 😉 To: you, From: eBay – We picked you for something brilliant

- Tim, got deals on your mind?

- To: Tim, From: Us—still haven’t found what you’re looking for?

- Fancy finding yourself a gift, Tim?

- To: Tim, a little something from us! Guess what’s flying off our shelves….

How can this work for you? Test subject lines with different approaches.

- Clear benefit statement—say it like it is

- Curiosity-based

- Questioning

- Urgency-driven

- Using numbers, such as, “5 reasons to …”

- Loss aversion

- Single-topic subject line or multiple topics

The issue of meek tweaks applies to design and layout, as well as copy. When testing new designs, be bold and do more than just change the button color.

2. Only Running A/B Tests

“You’ve got to be in it to win it” comes to mind. You can’t win the lottery if you don’t play and the more often you play, the more likely you’ll get lucky.

Split testing is the same—if you only do a little bit, then you’re unlikely to be successful. Doing lots of tests is a key ingredient to testing success.

Though unlike with the lottery, you should be able to do many tests and get a win before you go bankrupt trying.

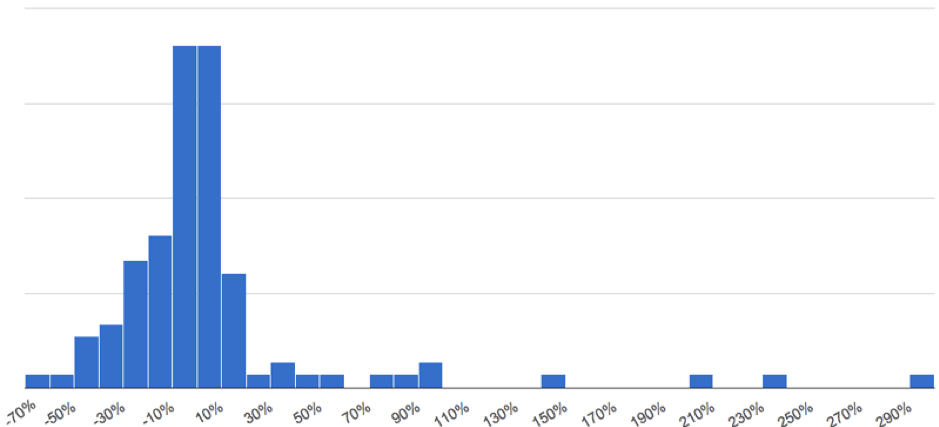

Experiment Engine analyzed several hundred web page tests and found an average test uplift of 6%.

They plotted a histogram showing the number of tests against the uplift. In the chart, the X-axis is the uplift achieved and the Y-axis is the number of tests that got that uplift.

Most tests produced an uplift somewhere between -10% and +10%.

Very few deliver the 50%+ step-change uplifts we all want, and a lot of tests end in a tie.

Source: Experiment Engine

Your chances of getting those tantalizing big wins are related to how often you try. You’re likely to be disappointed if you only run a couple of tests.

On the other hand, if you run 20 tests but don’t get a huge win, you’ll likely have a series of good wins that makes it worthwhile.
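To see why volume matters, here is a rough sketch of the chance of landing at least one big win across a series of tests. It assumes each test is independent and has the same chance of a big win. The 5% figure is a hypothetical illustration, not a number taken from the Experiment Engine data.

```python
def chance_of_at_least_one_big_win(p_big_win: float, num_tests: int) -> float:
    """Probability that at least one of num_tests independent tests is a big win."""
    return 1 - (1 - p_big_win) ** num_tests


# Hypothetical: assume 1 test in 20 (5%) delivers a big win.
for n in (2, 10, 20):
    print(f"{n} tests: {chance_of_at_least_one_big_win(0.05, n):.0%}")
```

Under these assumptions, a couple of tests leaves you with roughly a one-in-ten chance, while 20 tests makes a big win more likely than not.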

Running more tests is not the only way to increase testing. Create multiple test treatments for each test. Why only test two subject lines? Why not five or ten? Run A/B/C/D/… tests, not just A/B tests.

The only limitation to how many tests you run is the size of your list. Using a test cell size of 5,000, you’ll need a list of 50,000 subscribers to run ten different subject lines.

Not only does it mean more chances to get a significant winner, but it also makes comparing results easier—factors like offer, timing and external effects don’t play a part.

Compare this with running successive A/B tests, where you end up comparing apples to oranges. An A/B test of curiosity against urgency one week can’t be easily compared with curiosity against loss aversion another week.
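The list-size arithmetic from earlier in this section (ten subject lines at 5,000 contacts per cell needs 50,000 subscribers) can be sketched as follows. The function names are illustrative, and the sketch assumes equal-sized test cells with every contact on the list available for testing.

```python
def required_list_size(num_variants: int, cell_size: int = 5000) -> int:
    """Subscribers needed to give every variant its own full test cell."""
    return num_variants * cell_size


def max_variants(list_size: int, cell_size: int = 5000) -> int:
    """Largest number of variants a list can support at the given cell size."""
    return list_size // cell_size


print(required_list_size(10))  # ten subject lines at 5,000 per cell
print(max_variants(50_000))    # variants a 50,000-subscriber list supports
```

If your list is smaller, `max_variants` tells you how many treatments you can run at once without shrinking the cells.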

3. No Clear Test Rationale

It can be tempting to run a test just to see what happens. You see a subject line that appeals to you or read that a brand changed their button colors and saw improvements, so you decide to test the same.

Getting inspiration from other brands’ tests is fine. But before you test, have a clear idea why it could be important to your audience.

A simple way to create a test hypothesis is to answer these two questions, being as specific as you can:

- What do you believe is the obstacle to the desired action?

- Which test will solve the problem and verify or disprove the obstacle?

For instance, take a test of a percentage-off vs. a dollar-off discount. The hypothesis is that customers don’t readily grasp what a percentage discount is worth, so explicitly noting the amount saved may make the value clearer to them.

This test hypothesis answers both questions and is specific.

To help you develop different hypotheses, seek wide input by asking yourself these questions.

- What do link click heatmaps suggest to you about customer behavior and interest?

- What do the non-marketing people in your organization say about the emails?

- What language is used by your sales or customer service teams? What questions are asked and how are obstacles removed?

- Do you get any feedback from customer surveys?

- What issues does your customer service team handle? What do these issues say about customer needs and expectations?

- How can you apply testing of other channels (push, SMS, in-app) to email?

4. Ignoring the Importance of Math

If you’ve ever played a game of chance, then you’ll know how sometimes you can go several rounds being unlucky.

You’re not handed the good cards, or when the dice roll, the numbers you need don’t show. But the dice certainly have all six numbers, even if you roll several times without throwing a 6.

Everyone understands that there is randomness involved in a casino game. The same is true of split testing, where there is an element of randomness in the results.

A winner is only a winner when the result is statistically significant. That means having a high degree of confidence that the same variant would win if you repeated the test.

Thankfully, math gives a clear answer.
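As an illustration of the kind of math involved, here is a minimal sketch of a two-proportion z-test, one common way to check whether the difference between two open or click rates is statistically significant. The article does not name a specific method, so this choice is an assumption. The counts in the example are hypothetical, and the function does not handle the edge case where both rates are exactly 0% or 100%.

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the difference in two rates."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Pooled rate under the assumption that A and B perform the same.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical test: 5,000 contacts per cell, 1,000 opens for A, 1,100 for B.
z, p = two_proportion_z_test(1000, 5000, 1100, 5000)
print(f"z = {z:.2f}, p = {p:.3f}")
print("Significant at the 5% level" if p < 0.05 else "Not significant")
```

A p-value below the threshold you picked in advance (0.05 is a common convention) is what a calculator usually reports as a significant winner.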

The test cell sample size plays a key part in ensuring robust results. The bigger, the better—and for open rate or click rate tests a good number is 5,000+ contacts per test cell.

The size of the uplift also affects how quickly statistical significance is reached: a bigger uplift can be detected with a smaller sample.

The good news is there is no need to do the math yourself: just use one of the many online calculators to check your results and plan future tests that make a real difference for your business.
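For the curious, here is a rough sketch of what a sample size calculator typically computes, using a standard formula for comparing two proportions. The 5% significance level and 80% power defaults are common conventions rather than figures from this article, and the baseline rate and uplifts in the example are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_cell(baseline_rate: float, relative_uplift: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate contacts needed per test cell to detect the given uplift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical 20% baseline open rate, with a small and a large uplift.
for uplift in (0.10, 0.30):
    print(f"{uplift:.0%} uplift: {sample_size_per_cell(0.20, uplift):,} per cell")
```

Running it with a small and a large uplift shows the point made above: the bigger the expected difference, the fewer contacts each cell needs.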